Generative AI

- Bogdan Podaru

- Apr 6, 2022

- 3 min read

Deep generative models have gained a lot of interest because of their ability to generate highly realistic images. Generative Adversarial Networks (GANs) solve the generative task with two sub-models:

The generator model that is trained to produce novel images.

The discriminator model that is trained to distinguish real images (from the training set) from fake images (the ones we generate).

StyleGAN2-ADA Insight

The StyleGAN is an extension of the GAN architecture that is able to control the style of the generated image at different levels of detail by varying noise and the so-called style vectors.

StyleGAN2-ADA is an improved version of StyleGAN that is particularly useful when dealing with limited datasets due to a technique called Adaptive Discriminator Augmentation [1], which is used to enlarge the initial dataset by performing different augmentative operations: flipping along horizontal axis, rotating the image, scaling, changing the brightness, the contrast, the hue, saturation, etc.

This article discusses how to generate novel superheroes with an instance of StyleGAN2-ADA model.

Hardware Configuration for Training

8 Tesla V100 GPUs

500GB of SSD

32 GB of RAM

32 virtual CPUs

Dataset preparation

The dataset was downloaded from Kaggle [2]. The semi-automated procedure that was applied is outlined below.

1. All outliers have been removed. An image was considered an outlier if:

the background and the foreground were not clearly separated

the superhero did not present human-like features (e.g., ants, robots)

the color gamma was not cartoonish and was too realistic

2. The dataset was split into full-body and upper-body superheroes. The upper-body subset was selected as it had the most images and poses of superheroes, which were not as varied as those in the full-body subset.

3. The background was automatically removed to ease the training.

4. The aspect ratio of 1:1with resolution of 512 x 512 for each image was set.

5. Each image was saved as PNG with a white background.

Training Configuration

5 training cycles, 5000 kimg each

5 days using the specified hardware (this time includes instance interruptions and their resolutions).

RGB images with resolution 512 x 512 yielding a total of 28700647 parameters for the generator and 28982849 parameters for the discriminator.

StyleGAN Hyperparameters

mini batch size = 64

learning rate for both generator and discriminator = 25e-4

ADA target value = 0.6 (the system increases/decreases augmentation if it senses overfitting or underfitting during training but up to this target)

Evolution

In the early stages of training, our model was trying to concentrate noise into object artifacts. In subsequent stages, the model was concentrating these artifacts into larger ones until superheroes silhouettes were clearly distinguished. From that point on, optimization focus was on improving high-level features such as facial artifacts, shoulders, shadow casts, foreground separation, as well as distinguishing arms from the body and randomizing all these by creating different angles of views, different body positions, sometimes by enlarging images.

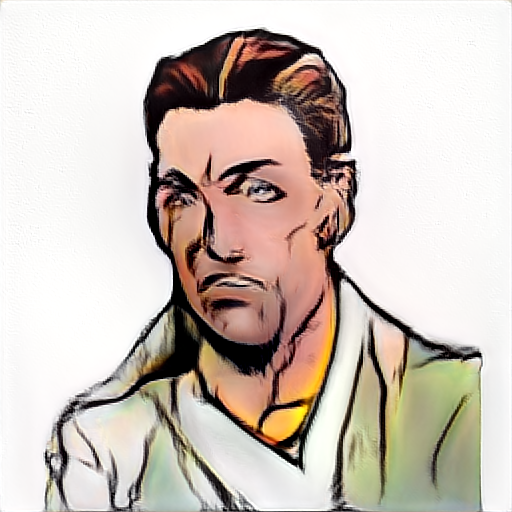

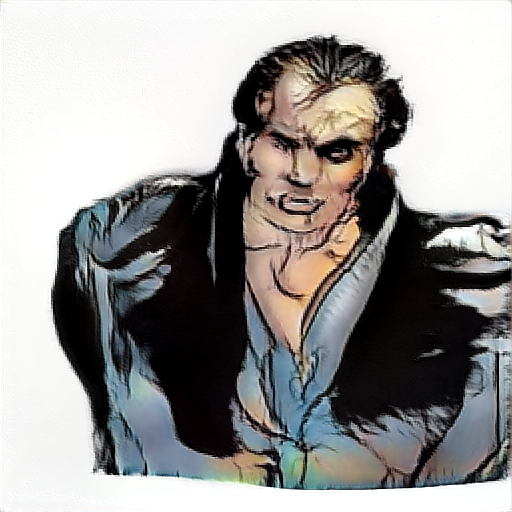

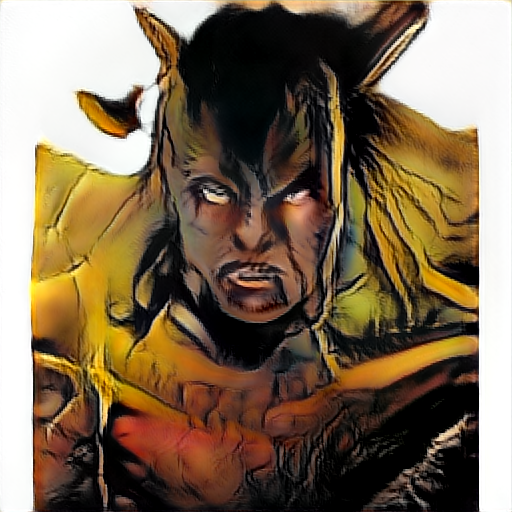

We can see that the model is capable of producing novel superheroes with high quality and good facial features accuracy. Check these guys out:

We have generated these images by choosing random seeds from the latent space. It can also be seen how the generative model is able to morph between related seeds (closed in the latent space). Think of morphing as of borrowing features from two different superheroes (e.g., the superhero will look like Batman and Superwoman's child if these two were chosen as seeds for generation). Check the animation bellow to understand how the model is randomizing features.

The model does not suffer from mode collapse (i.e., low diversity), although there are clearly a few samples better represented than others (e.g., samples in which we can clearly distinguish between arms and body or samples zooming on the face only). However, the model did suffer from training instability. It is known for GANs that there is an interval of epochs during training in there is an equilibrium between the generator and discriminator. After these epochs, the generator’s loss could increase so much that it will produce bad quality samples, samples that the discriminator could easily classify, thus pushing the discriminator’s loss down to 0. For this reason, as the best model for generating superheroes, we chose a model in which training was stable.

Training Manager

The instance we used for training presented interruptions during the optimization process, leading to a series of manual restarts in the training process. This was time-consuming given that the instance required monitoring and every restart required a few manual steps that extended the optimization time, thus increasing the overall cost of using the instance. To avoid this, we have developed a training manager that solves exactly these issues in a robust manner:

Training manager starts by checking for a new training request in a self-managed queue.

If there is an already started job that has not yet been completed, the training manager will occasionally check its status and progress.

If the instance was interrupted and the job was stopped, the manager will find where the training left off and restart from that point with the remaining kimg to train against.

Comments